Introduction to TensorBoard in PyTorch

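TensorBoard is a visualization toolkit for machine learning experiments. It lets you track and visualize metrics such as loss and accuracy, visualize the model graph, view histograms, display images, and more. This post covers using TensorBoard with PyTorch. The core idea is to record the data you need with SummaryWriter() (comparable to a run object in Wandb) and then visualize it on a local server (localhost).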
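To make the "record locally, then visualize" idea concrete, here is a minimal sketch that writes a few scalars with SummaryWriter into a temporary directory and reads them back with TensorBoard's EventAccumulator, which parses the same event files the TensorBoard server reads. The tag name `demo/loss` and the values are illustrative; EventAccumulator lives in TensorBoard's backend package rather than a documented public API, so its import path could change between versions.

```python
import tempfile

from tensorboard.backend.event_processing.event_accumulator import EventAccumulator
from torch.utils.tensorboard import SummaryWriter

log_dir = tempfile.mkdtemp()

# Write three scalar points under one (illustrative) tag
writer = SummaryWriter(log_dir=log_dir)
for step, value in enumerate([1.0, 0.5, 0.25]):
    writer.add_scalar("demo/loss", value, step)
writer.close()  # flush all pending events to the event file

# Load the event file back, as the TensorBoard server would
acc = EventAccumulator(log_dir)
acc.Reload()
events = acc.Scalars("demo/loss")
steps = [e.step for e in events]
values = [e.value for e in events]
print(steps, values)
```

Each returned event also carries a `wall_time`, which is what TensorBoard uses for its "relative" and "wall" x-axis modes.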

Creating a SummaryWriter instance

```
!pip install tensorboard
```

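TensorBoard can be thought of as a data visualization tool: before using it, we need to record the data produced during training, and that is the job of a SummaryWriter instance.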

```python
import torch
from torch.utils.tensorboard import SummaryWriter

# With no arguments, logs go to ./runs/CURRENT_DATETIME_HOSTNAME
writer = SummaryWriter()
```
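The default run directory works, but when comparing several runs it can help to name the directory yourself. One plausible use of a `datetime` import is a timestamped run directory, sketched below; the `runs/` root and the `mlp-` prefix are hypothetical naming choices. SummaryWriter can also be used as a context manager, which closes (and flushes) the writer on exit.

```python
import datetime
import os

from torch.utils.tensorboard import SummaryWriter

# Hypothetical naming scheme: runs/mlp-YYYYmmdd-HHMMSS
stamp = datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
log_dir = os.path.join("runs", f"mlp-{stamp}")

# The with-block closes the writer, flushing pending events to disk
with SummaryWriter(log_dir=log_dir) as writer:
    writer.add_scalar("sanity/constant", 1.0, 0)

# SummaryWriter creates the directory and an events.out.tfevents.* file in it
event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
print(log_dir, event_files)
```

Pointing TensorBoard at the parent directory (`tensorboard --logdir runs`) then lists each timestamped subdirectory as a separate run, served by default at http://localhost:6006.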

Example: monitoring a perceptron (MLP) regression with TensorBoard

1

2

3

4

5

6

7

8

9

10

11

12

13

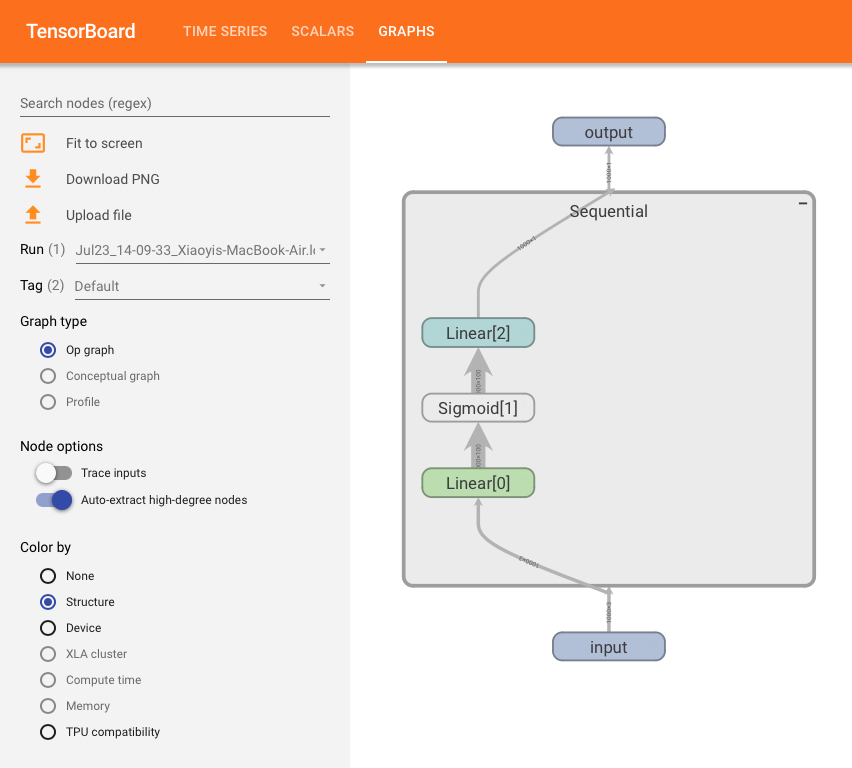

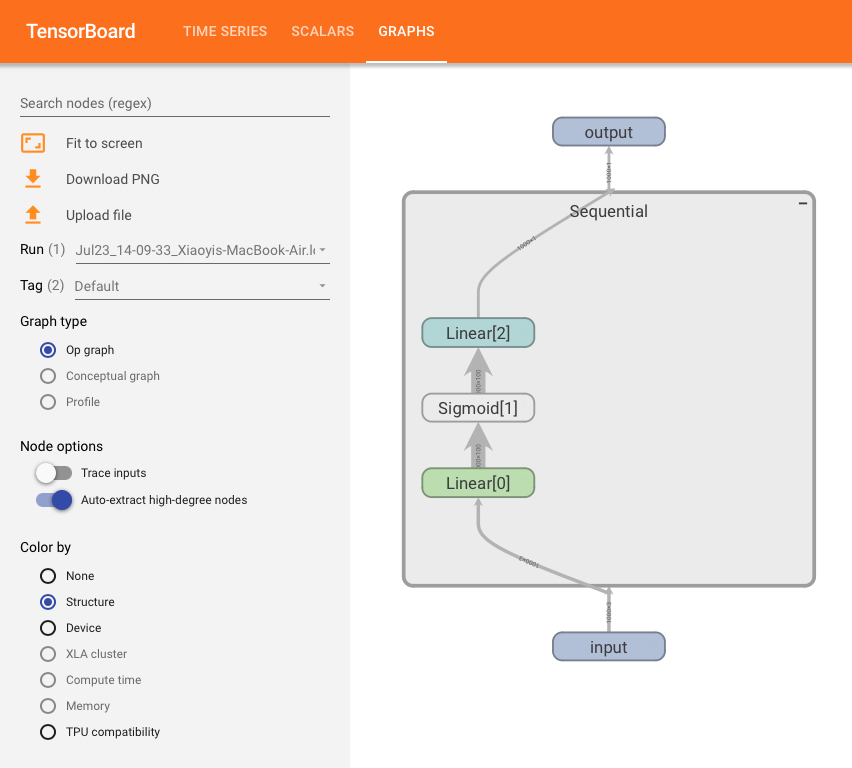

| model = torch.nn.Sequential(

torch.nn.Linear(3, 1000),

torch.nn.Sigmoid(),

torch.nn.Linear(1000, 1)

)

def init_weights(m):

if type(m) == torch.nn.Linear:

torch.nn.init.normal_(m.weight, std=0.01)

model.apply(init_weights)

model

|

```
Sequential(
  (0): Linear(in_features=3, out_features=1000, bias=True)
  (1): Sigmoid()
  (2): Linear(in_features=1000, out_features=1, bias=True)
)
```

Generate a nonlinear synthetic dataset: $y = \sin(x_1) + \cos(x_2) + x_3^2$, with $x_1, x_2, x_3$ drawn uniformly from $[0, 1)$.

```python
def generate_data(n=1000):
    # Features drawn uniformly from [0, 1)
    x = torch.rand(n, 3)
    y = torch.sin(x[:, 0]) + torch.cos(x[:, 1]) + x[:, 2] ** 2
    # Reshape targets to (n, 1) so they match the model's output shape
    return x, y.unsqueeze(1)

x, y = generate_data()
x_val, y_val = generate_data(n=100)

data_set = torch.utils.data.TensorDataset(x, y)
train_loader = torch.utils.data.DataLoader(data_set, batch_size=16, shuffle=True)
```
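The model ends in `Linear(1000, 1)`, so its output has shape `(batch, 1)`. If the targets keep shape `(batch,)`, `MSELoss` broadcasts the pair into a `(batch, batch)` matrix and averages the wrong differences; PyTorch only emits a warning. The small sketch below uses made-up numbers where the predictions exactly equal the targets, so the correct loss is zero.

```python
import torch

criterion = torch.nn.MSELoss()

# Predictions shaped like the model output: (batch, 1)
pred = torch.tensor([[1.0], [2.0], [3.0], [4.0]])
# Targets equal to the predictions, but stored as a flat (batch,) vector
target_flat = torch.tensor([1.0, 2.0, 3.0, 4.0])

# (4, 1) vs (4,) broadcasts to (4, 4): every prediction is compared with every target
wrong = criterion(pred, target_flat)
# (4, 1) vs (4, 1): element-wise comparison, as intended
right = criterion(pred, target_flat.unsqueeze(1))

print(wrong.item(), right.item())  # 2.5 0.0
```

The broadcast version averages $(i - j)^2$ over all 16 index pairs, giving $40 / 16 = 2.5$ even though the fit is perfect.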

Viewing the model structure with TensorBoard

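```python
# add_graph traces the model with an example input and logs its computation graph
example_input = x
writer.add_graph(model, example_input)
```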
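Because `add_graph` traces the model by running it once, a model without data-dependent control flow produces the same graph from a single sample as from the full dataset. A sketch assuming a throwaway temporary log directory, logging the same architecture with a `(1, 3)` input and checking that an event file was written:

```python
import os
import tempfile

import torch
from torch.utils.tensorboard import SummaryWriter

# Same architecture as in the example above
model = torch.nn.Sequential(
    torch.nn.Linear(3, 1000),
    torch.nn.Sigmoid(),
    torch.nn.Linear(1000, 1),
)

log_dir = tempfile.mkdtemp()
with SummaryWriter(log_dir=log_dir) as writer:
    # One sample is enough to trace this static feed-forward graph
    writer.add_graph(model, torch.rand(1, 3))

event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
graph_bytes = sum(os.path.getsize(os.path.join(log_dir, f)) for f in event_files)
print(event_files, graph_bytes)
```

The graph then appears under the GRAPHS tab, where each `Sequential` child can be expanded.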

```python
criterion = torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
num_epochs = 100

for epoch in range(1, num_epochs + 1):
    running_loss = 0.0
    for inputs, targets in train_loader:
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, targets)
        loss.backward()
        optimizer.step()
        # Accumulate the summed loss so we can report a per-sample epoch average
        running_loss += loss.item() * inputs.size(0)

    # One training-loss point per epoch
    train_loss = running_loss / len(train_loader.dataset)
    writer.add_scalar('train_loss', train_loss, epoch)
    print(f'Epoch {epoch}, Loss: {train_loss:.4f}')

    with torch.no_grad():
        # L2 norm of every parameter tensor, logged at the same step as the losses
        for name, param in model.named_parameters():
            writer.add_scalar(f'{name}_norm', param.norm().item(), epoch)

        # Validation loss on the held-out set
        val_hat = model(x_val)
        val_loss = criterion(val_hat, y_val)
        writer.add_scalar('val_loss', val_loss.item(), epoch)

# Flush pending events and release the log file
writer.close()
```
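Using the epoch number as the step gives one point per epoch. When per-batch curves are wanted, a common alternative is a global step that keeps increasing across epochs, and `add_histogram` can complement the norm plots by showing the full weight distribution. The sketch below assumes a smaller 32-unit hidden layer, 64 random samples, 3 epochs and hypothetical tag names, purely to keep it fast.

```python
import tempfile

import torch
from tensorboard.backend.event_processing.event_accumulator import EventAccumulator
from torch.utils.tensorboard import SummaryWriter

torch.manual_seed(0)
x = torch.rand(64, 3)
y = (torch.sin(x[:, 0]) + torch.cos(x[:, 1]) + x[:, 2] ** 2).unsqueeze(1)
loader = torch.utils.data.DataLoader(
    torch.utils.data.TensorDataset(x, y), batch_size=16, shuffle=True
)
model = torch.nn.Sequential(torch.nn.Linear(3, 32), torch.nn.Sigmoid(), torch.nn.Linear(32, 1))
criterion = torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

log_dir = tempfile.mkdtemp()
num_epochs = 3
with SummaryWriter(log_dir=log_dir) as writer:
    for epoch in range(num_epochs):
        for batch_idx, (inputs, targets) in enumerate(loader):
            optimizer.zero_grad()
            loss = criterion(model(inputs), targets)
            loss.backward()
            optimizer.step()
            # Unique, monotonically increasing step across all epochs
            global_step = epoch * len(loader) + batch_idx
            writer.add_scalar("train/batch_loss", loss.item(), global_step)
        # One histogram of the first layer's weights per epoch
        writer.add_histogram("weights/layer0", model[0].weight, epoch)

# Read the log back to confirm what was recorded
acc = EventAccumulator(log_dir)
acc.Reload()
loss_steps = [e.step for e in acc.Scalars("train/batch_loss")]
print(len(loss_steps), acc.Tags()["histograms"])
```

With 64 samples and a batch size of 16 there are 4 batches per epoch, so 3 epochs yield global steps 0 to 11.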

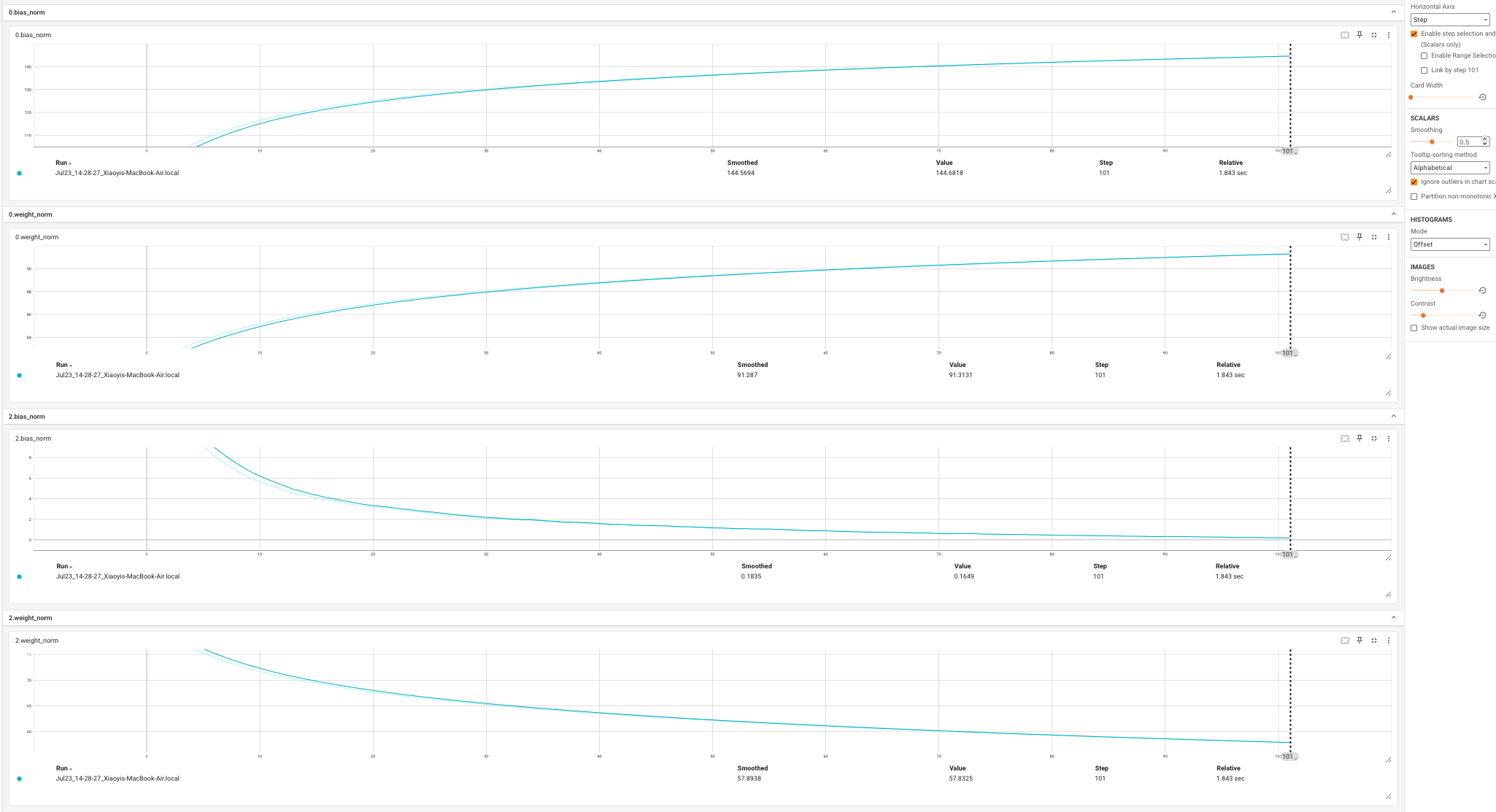

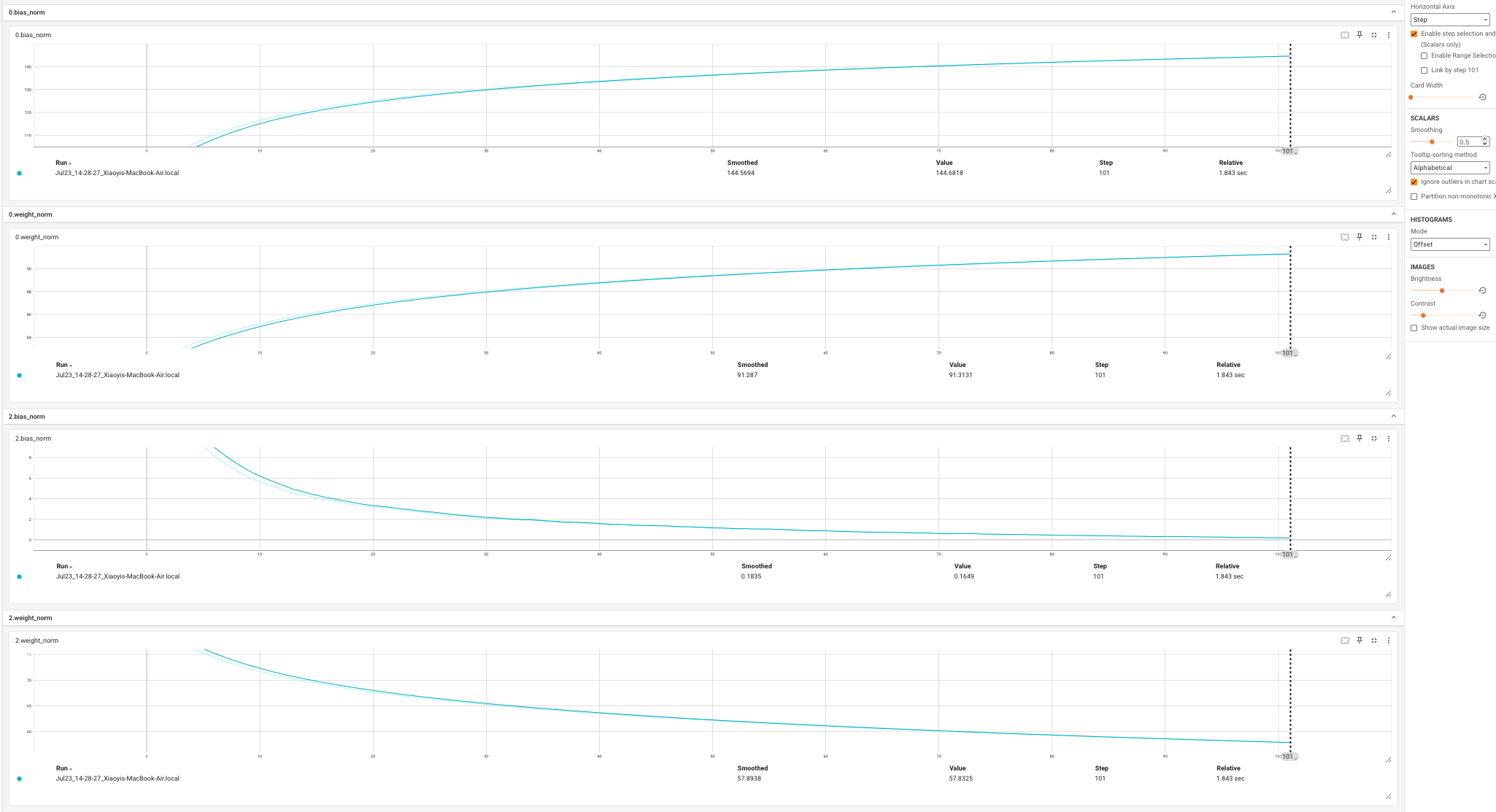

Training process under different activation functions:

Parameter norms during training with the Sigmoid activation:
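Comparing training curves across activation functions is easiest when each configuration writes to its own subdirectory of one parent log directory: TensorBoard shows each subdirectory as a separate run and overlays runs that share a tag. A compact sketch, assuming Sigmoid, ReLU and Tanh as the candidates, a 64-unit hidden layer, and 20 full-batch SGD steps on the same synthetic function; the run names are hypothetical.

```python
import os
import tempfile

import torch
from torch.utils.tensorboard import SummaryWriter

torch.manual_seed(0)
x = torch.rand(256, 3)
y = (torch.sin(x[:, 0]) + torch.cos(x[:, 1]) + x[:, 2] ** 2).unsqueeze(1)

# Candidate activations (an assumption; swap in whichever you want to compare)
activations = {"sigmoid": torch.nn.Sigmoid, "relu": torch.nn.ReLU, "tanh": torch.nn.Tanh}
root = tempfile.mkdtemp()  # in practice something like "runs/activation_comparison"

final_losses = {}
for name, act in activations.items():
    model = torch.nn.Sequential(torch.nn.Linear(3, 64), act(), torch.nn.Linear(64, 1))
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    criterion = torch.nn.MSELoss()
    # One subdirectory per run; the shared tag "train_loss" lets TensorBoard overlay the curves
    with SummaryWriter(log_dir=os.path.join(root, name)) as writer:
        for epoch in range(20):
            optimizer.zero_grad()
            loss = criterion(model(x), y)
            loss.backward()
            optimizer.step()
            writer.add_scalar("train_loss", loss.item(), epoch)
    final_losses[name] = loss.item()

print(sorted(os.listdir(root)), final_losses)
```

Launching TensorBoard with `--logdir` set to the parent directory then shows the three runs side by side on the same `train_loss` chart.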

Comparing Wandb and TensorBoard

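- Compared with Wandb, TensorBoard makes plotting the model structure simpler.

- It works without an internet connection, but the local server is sometimes unstable and often needs refreshing; you also have to keep track of where the log files are stored and manage them yourself.

- It is not well suited to collaborative work; Wandb can largely replace TensorBoard.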